Container > NHN Kubernetes Service (NKS) > User Guide

Cluster

A cluster is the group of instances that make up a user's Kubernetes environment.

Kubernetes Version Support Policy

NKS's Kubernetes version support policy is as follows.

- Support for the latest Kubernetes version
    - NKS continuously delivers the latest Kubernetes version to ensure that your cluster is up to date.
    - You can create a cluster with a new version or upgrade an existing cluster to a new version.
- Creatable versions
    - The number of Kubernetes versions with which you can create clusters is kept at four.
    - Therefore, when a new creatable version is added, the oldest creatable version is removed from the list of creatable versions.
- Serviceable versions
    - Clusters using end-of-service versions are not guaranteed to work with new features in NKS.
    - You can upgrade the Kubernetes version of your cluster with the cluster version upgrade feature in NKS.
    - The number of Kubernetes versions that are serviced is kept at five.
    - Therefore, when a new version is added to the creatable versions, the oldest of the serviceable versions reaches end of service.
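The policy above can be pictured as two sliding windows over the list of released versions. The following is a minimal sketch, not NKS code; the function name `support_windows` and its return keys are hypothetical, and it assumes the versions are passed in release order (oldest first).

```python
def support_windows(versions, creatable=4, serviceable=5):
    """Split an oldest-to-newest version list into the policy's windows.

    Hypothetical helper for illustration: the newest `creatable` versions
    can be used for new clusters, the newest `serviceable` versions are
    still serviced, and everything older is end of service.
    """
    return {
        "creatable": versions[-creatable:],
        "serviceable": versions[-serviceable:],
        "end_of_service": versions[:-serviceable],
    }


# Versions released up to the point where v1.27.3 was added.
released = ["v1.22.3", "v1.23.3", "v1.24.3", "v1.25.4", "v1.26.3", "v1.27.3"]
windows = support_windows(released)
```

With these six versions, v1.22.3 falls out of service and v1.23.3 remains serviceable but is no longer creatable, which matches the dates listed for those versions in the schedule table.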

The table below shows, for each Kubernetes version, when it is added to and removed from the creatable versions and when its service support ends. (This table is current as of September 26, 2023. The version names of creatable versions and the dates on which they are provided are subject to change due to internal circumstances.)

| Version | Added to Creatable Versions | Removed from Creatable Versions | End of Service Support |

|---|---|---|---|

| v1.22.3 | 2022. 01. | 2023. 05. | 2023. 08. |

| v1.23.3 | 2022. 03. | 2023. 08. | 2024. 02. |

| v1.24.3 | 2022. 09. | 2024. 02. | 2024. 05.(Scheduled) |

| v1.25.4 | 2023. 01. | 2024. 05.(Scheduled) | 2024. 08.(Scheduled) |

| v1.26.3 | 2023. 05. | 2024. 08.(Scheduled) | 2025. 02.(Scheduled) |

| v1.27.3 | 2023. 08. | 2025. 02.(Scheduled) | 2025. 05.(Scheduled) |

| v1.28.3 | 2024. 02. | 2025. 05.(Scheduled) | 2025. 08.(Scheduled) |

| v1.29.x | 2024. 05.(Scheduled) | 2025. 08.(Scheduled) | 2025. 11.(Scheduled) |

Creating Clusters

To use NHN Kubernetes Service (NKS), you must create clusters first.

[Caution] Setting up permissions to use clusters

To create a cluster, the user must have the Infrastructure ADMIN or Infrastructure LoadBalancer ADMIN permission for the project's basic infrastructure services. Only users with one of these permissions can create and operate clusters that run on basic infrastructure services. A user who already holds one of the two permissions can also be granted the other. To learn more about setting up permissions, see Manage Project Members. If the permissions that were in place when the cluster was created change later (permissions added or deleted), some features of the cluster may be restricted. For more information, see Change Cluster OWNER.

Go to Container > NHN Kubernetes Service(NKS) and click Create Cluster to open the cluster creation page. The following items are required to create a cluster.

| Item | Description |

|---|---|

| Cluster Name | Name of a Kubernetes cluster. It is limited to 20 characters, and only lowercase English letters, numbers, and '-' can be entered. It must start with a lowercase letter and end with a lowercase letter or number. RFC 4122 compliant UUID formats cannot be used. |

| Kubernetes Version | Kubernetes version to use |

| VPC | VPC network to be attached to clusters |

| Subnet | Subnet to be associated with instances that comprise a cluster, among those defined in VPC |

| NCR Service Gateway | NCR type service gateway (required only if the subnet is not connected to an internet gateway) |

| OBS Service Gateway | OBS type service gateway (required only if the subnet is not connected to an internet gateway) |

| K8s Service Network | Service object CIDR for a cluster |

| Pod Network | Pod network for a cluster |

| Pod Subnet Size | Pod subnet size for a cluster |

| Kubernetes API endpoint | Public: Endpoint assigned a domain address and associated with a floating IP. Private: Endpoint set to an internal network address. |

| Enhanced Security Rule | Whether to create only the required security rules when creating the worker node security group. See Required Security Rules for Cluster Worker Nodes. True: Create only the required security rules. False: Create the required security rules and security rules that allow all ports. |

| Image | Images for instances comprising a cluster |

| Availability Zone | Area to create a default node group instance |

| Flavor | Instance specifications for a default node group |

| Node Count | Number of instances for a default node group |

| Key Pair | Key pair to access default node group |

| Block Storage Type | Type of block storage for a default node group instance |

| Block Storage Size | Size of block storage for a default node group instance |

| Additional Network | Additional network and subnet to create in a default worker node group |
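The naming rules for Cluster Name in the table above can be checked before submitting the form. The following is a minimal sketch of such a check, not the validation NKS itself performs; the function name `is_valid_name` and the two patterns are illustrative assumptions derived from the stated rules.

```python
import re

# Starts with a lowercase letter, contains only lowercase letters, digits
# and '-', and ends with a lowercase letter or digit.
NAME_PATTERN = re.compile(r"[a-z]([a-z0-9-]*[a-z0-9])?")

# Canonical RFC 4122 text form (8-4-4-4-12 hexadecimal digits).
UUID_PATTERN = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}"
)

MAX_NAME_LENGTH = 20


def is_valid_name(name: str) -> bool:
    """Return True if `name` satisfies the documented naming rules."""
    if UUID_PATTERN.fullmatch(name):
        # Kept explicit for readability; a canonical UUID is 36 characters
        # long, so the length limit below would reject it as well.
        return False
    if len(name) > MAX_NAME_LENGTH:
        return False
    return NAME_PATTERN.fullmatch(name) is not None
```

For example, `is_valid_name("my-cluster-01")` is accepted, while `"My-cluster"`, `"1cluster"`, and `"cluster-"` are rejected.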

[Caution] The CIDRs of the VPC network subnet, K8s service network, and pod network are subject to the following constraints.

- A CIDR cannot overlap with the link-local address range (169.254.0.0/16).
- The CIDRs of the VPC network subnet, additional network subnets, pod network, and K8s service network cannot overlap with one another.
- A CIDR cannot overlap with the IP range used inside NKS (198.18.0.0/19).
- You cannot enter a CIDR block with a prefix longer than /24. (For example, /26 and /30 are not available.)
- For clusters of v1.23.3 or earlier, a CIDR cannot overlap with the BIP (bridged IP range) (172.17.0.0/16).
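The CIDR constraints above can be checked locally with Python's standard `ipaddress` module before creating a cluster. This is a minimal sketch under the assumption that the ranges quoted in the caution are the only ones to test; the function name `cidr_violations` and its parameters are hypothetical and not part of NKS.

```python
import ipaddress

LINK_LOCAL = ipaddress.ip_network("169.254.0.0/16")
NKS_INTERNAL = ipaddress.ip_network("198.18.0.0/19")
BIP = ipaddress.ip_network("172.17.0.0/16")
MAX_PREFIX_LENGTH = 24


def cidr_violations(cidr, other_cidrs=(), check_bip=False):
    """Return a list of documented constraints that `cidr` violates.

    other_cidrs: the remaining networks of the cluster (VPC subnet,
                 additional subnets, pod network, K8s service network).
    check_bip:   set to True for clusters of v1.23.3 or earlier.
    """
    net = ipaddress.ip_network(cidr)
    problems = []
    if net.prefixlen > MAX_PREFIX_LENGTH:
        problems.append("prefix longer than /24")
    if net.overlaps(LINK_LOCAL):
        problems.append("overlaps link-local range 169.254.0.0/16")
    if net.overlaps(NKS_INTERNAL):
        problems.append("overlaps NKS internal range 198.18.0.0/19")
    if check_bip and net.overlaps(BIP):
        problems.append("overlaps BIP range 172.17.0.0/16")
    for other in other_cidrs:
        if net.overlaps(ipaddress.ip_network(other)):
            problems.append(f"overlaps {other}")
    return problems
```

An empty list means none of the listed constraints were hit; for example, `cidr_violations("10.100.0.0/16", ["192.168.0.0/24", "10.254.0.0/16"])` returns `[]`.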

You should not delete the service gateways that you set up when you created the cluster.

- If the selected subnet is not connected to an internet gateway, you need to set up an NCR service gateway and an OBS service gateway.
- These two service gateways are used to get the images/binaries required for NKS cluster configuration and basic features.
- If you delete a service gateway that was set up when the cluster was created, the cluster will not work properly.

You should not change the internet gateway connectivity of the subnet that you set when you created the cluster.

- The registry from which images/binaries are received depends on whether the subnet you set up when creating the cluster is connected to an internet gateway.
- If the subnet's internet gateway connectivity changes after the cluster is created, the cluster will not work properly because it cannot connect to the configured registry.

[Maximum number of nodes that can be created] The maximum number of nodes that can be created in a cluster is determined by the pod network and pod subnet size settings.

- Calculation: 2^(Pod Subnet Size - Prefix Length of Pod Network) - 3
- Example: with pod subnet size = 24 and pod network = 10.100.0.0/16, 2^(24 - 16) - 3 = up to 253 nodes can be created

[Maximum number of IPs that can be assigned to Pods on each node] The maximum number of IPs that can be used on a single node is determined by the pod subnet size setting.

- Calculation: 2^(32 - Pod Subnet Size) - 2
- Example: with pod subnet size = 24, 2^(32 - 24) - 2 = up to 254 IPs available

[Maximum number of IPs that can be assigned to Pods in the cluster]

- Calculation: Maximum number of IPs that can be assigned to Pods on each node * Maximum number of nodes that can be created
- Example: with pod subnet size = 24 and pod network = 10.100.0.0/16, 254 (maximum number of IPs per node) * 253 (maximum number of nodes) = up to 64,262 IPs available
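The three calculations above can be reproduced with a few lines of Python. This is a small sketch for checking a planned configuration; the function names are illustrative, and the pod network prefix length is read from the CIDR with the standard `ipaddress` module.

```python
import ipaddress


def max_nodes(pod_network: str, pod_subnet_size: int) -> int:
    """2^(pod subnet size - pod network prefix length) - 3."""
    prefix_length = ipaddress.ip_network(pod_network).prefixlen
    return 2 ** (pod_subnet_size - prefix_length) - 3


def max_pod_ips_per_node(pod_subnet_size: int) -> int:
    """2^(32 - pod subnet size) - 2."""
    return 2 ** (32 - pod_subnet_size) - 2


def max_pod_ips_in_cluster(pod_network: str, pod_subnet_size: int) -> int:
    """Per-node pod IPs multiplied by the maximum number of nodes."""
    return max_pod_ips_per_node(pod_subnet_size) * max_nodes(
        pod_network, pod_subnet_size
    )


# Values from the examples above: pod network 10.100.0.0/16, subnet size 24.
nodes = max_nodes("10.100.0.0/16", 24)                  # 253
ips_per_node = max_pod_ips_per_node(24)                 # 254
total_ips = max_pod_ips_in_cluster("10.100.0.0/16", 24)  # 64262
```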

NHN Kubernetes Service (NKS) supports several versions of Kubernetes. Some features may be restricted depending on the version.

| Version | Creating a new cluster | Using the created cluster |

|---|---|---|

| v1.17.6 | Unavailable | Available |

| v1.18.19 | Unavailable | Available |

| v1.19.13 | Unavailable | Available |

| v1.20.12 | Unavailable | Available |

| v1.21.6 | Unavailable | Available |

| v1.22.3 | Unavailable | Available |

| v1.23.3 | Unavailable | Available |

| v1.24.3 | Unavailable | Available |

| v1.25.4 | Available | Available |

| v1.26.3 | Available | Available |

| v1.27.3 | Available | Available |

| v1.28.3 | Available | Available |

NHN Kubernetes Service (NKS) provides different types of Container Network Interface (CNI) depending on the cluster version. Since March 31, 2023, clusters created with version 1.24.3 or higher use Calico as the CNI. Both Flannel and Calico operate in VXLAN network mode.

| Version | CNI Type and Version Installed when creating Cluster | CNI Change Available |

|---|---|---|

| v1.17.6 | Flannel v0.12.0 | Unavailable |

| v1.18.19 | Flannel v0.12.0 | Unavailable |

| v1.19.13 | Flannel v0.14.0 | Unavailable |

| v1.20.12 | Flannel v0.14.0 | Unavailable |

| v1.21.6 | Flannel v0.14.0 | Unavailable |

| v1.22.3 | Flannel v0.14.0 | Unavailable |

| v1.23.3 | Flannel v0.14.0 | Unavailable |

| v1.24.3 | Flannel v0.14.0 or Calico v3.24.1 (Note 1) | Conditionally Available (Note 2) |

| v1.25.4 | Flannel v0.14.0 or Calico v3.24.1 (Note 1) | Conditionally Available (Note 2) |

| v1.26.3 | Flannel v0.14.0 or Calico v3.24.1 (Note 1) | Conditionally Available (Note 2) |

| v1.27.3 | Calico v3.24.1 | Unavailable |

| v1.28.3 | Calico v3.24.1 | Unavailable |

Notes

- 1: Flannel is installed in clusters created before 31 March 2023. For clusters of v1.24.3 or higher created after that date, Calico is installed.

- 2: CNI change is supported only on clusters of v1.24.3 or higher, and currently only changing from Flannel to Calico is supported.

Required Security Rules for Cluster Worker Nodes

| Direction | IP protocol | Port range | Ether | Remote | Description | Considerations |

|---|---|---|---|---|---|---|

| ingress | TCP | 10250 | IPv4 | Worker node | kubelet port, direction: metrics-server(worker node) -> kubelet(worker node) | |

| ingress | TCP | 10250 | IPv4 | Master node | kubelet port, direction: kube-apiserver(NKS Control plane) -> kubelet(worker node) | |

| ingress | TCP | 5473 | IPv4 | Worker node | calico-typha port, direction: calico-node(worker node) -> calico-typha(worker node) | Created when CNI is calico |

| ingress | UDP | 8472 | IPv4 | Worker node | flannel vxlan overlay network port, direction: pod(worker node) -> pod(worker node) | Created when CNI is flannel |

| ingress | UDP | 8472 | IPv4 | Master node | flannel vxlan overlay network port, direction: pod(NKS Control plane) -> pod(worker node) | Created when CNI is flannel |

| ingress | UDP | 4789 | IPv4 | Worker node | calico-node vxlan overlay network port, direction: pod(worker node) -> pod(worker node) | Created when CNI is calico |

| ingress | UDP | 4789 | IPv4 | Master node | calico-node vxlan overlay network port, direction: pod(NKS Control plane) -> pod(worker node) | Created when CNI is calico |

| egress | TCP | 2379 | IPv4 | Master node | etcd port, direction: calico-kube-controllers(worker node) -> etcd(NKS Control plane) | |

| egress | TCP | 6443 | IPv4 | Kubernetes API endpoint | kube-apiserver port, direction: kubelet, kube-proxy(worker node) -> kube-apiserver(NKS Control plane) | |

| egress | TCP | 6443 | IPv4 | Master node | kube-apiserver port, direction: default kubernetes service(worker node) -> kube-apiserver(NKS Control plane) | |

| egress | TCP | 5473 | IPv4 | Worker node | calico-typha port, direction: calico-node(worker node) -> calico-typha(worker node) | Created when CNI is calico |

| egress | TCP | 53 | IPv4 | Allow all | DNS port, direction: worker node -> external | |

| egress | TCP | 443 | IPv4 | Allow all | HTTPS port, direction: worker node -> external | |

| egress | TCP | 80 | IPv4 | Allow all | HTTP port, direction: worker node -> external | |

| egress | UDP | 8472 | IPv4 | Worker node | flannel vxlan overlay network port, direction: pod(worker node) -> pod(worker node) | Created when CNI is flannel |

| egress | UDP | 8472 | IPv4 | Master node | flannel vxlan overlay network port, direction: pod (worker node) -> pod (NKS Control plane) | Created when CNI is flannel |

| egress | UDP | 4789 | IPv4 | Worker node | calico-node vxlan overlay network port, direction: pod(worker node) -> pod(worker node) | Created when CNI is calico |

| egress | UDP | 4789 | IPv4 | Master node | calico-node vxlan overlay network port, direction: pod(worker node) -> pod(NKS Control plane) | Created when CNI is calico |

| egress | UDP | 53 | IPv4 | Allow all | DNS port, direction: worker node -> external | |

When using enhanced security rules, the NodePort type of service and the ports used by the NHN Cloud NAS service are not added to the security rules. You need to set the following security rules as needed.

| Direction | IP protocol | Port range | Ether | Remote | Description |

|---|---|---|---|---|---|

| ingress, egress | TCP | 30000 - 32767 | IPv4 | Allow all | NKS service object NodePort, direction: external -> worker node |

| egress | TCP | 2049 | IPv4 | NHN Cloud NAS service IP address | RPC NFS port of csi-nfs-node, direction: csi-nfs-node(worker node) -> NHN Cloud NAS service |

| egress | TCP | 111 | IPv4 | NHN Cloud NAS service IP address | rpc portmapper port of csi-nfs-node, direction: csi-nfs-node(worker node) -> NHN Cloud NAS service |

| egress | TCP | 635 | IPv4 | NHN Cloud NAS service IP address | rpc mountd port of csi-nfs-node, direction: csi-nfs-node(worker node) -> NHN Cloud NAS service |

If you don't use enhanced security rules, additional security rules are created for services of type NodePort and for external network communication.

| Direction | IP protocol | Port range | Ether | Remote | Description |

|---|---|---|---|---|---|

| ingress | TCP | 1 - 65535 | IPv4 | Worker node | All ports, direction: worker node -> worker node |

| ingress | TCP | 1 - 65535 | IPv4 | Master node | All ports, direction: NKS Control plane -> worker node |

| ingress | TCP | 30000 - 32767 | IPv4 | Allow all | NKS service object NodePort, direction: external -> worker node |

| ingress | UDP | 1 - 65535 | IPv4 | Worker node | All ports, direction: worker node -> worker node |

| ingress | UDP | 1 - 65535 | IPv4 | Master node | All ports, direction: NKS Control plane -> worker node |

| egress | Any | 1 - 65535 | IPv4 | Allow all | All ports, direction: worker node -> external |

| egress | Any | 1 - 65535 | IPv6 | Allow all | All ports, direction: worker node -> external |

Enter the required information and click Create Cluster to start creating the cluster. You can check the status in the list of clusters. Creation takes about 10 minutes; more time may be required depending on the cluster configuration.

Querying Clusters

A newly created cluster can be found on the Container > NHN Kubernetes Service (NKS) page. Select a cluster to display its information at the bottom.

| Item | Description |

|---|---|

| Cluster Name | Cluster name |

| Node Count | Total number of worker nodes in the cluster |

| Kubernetes Version | Kubernetes version information |

| kubeconfig file | Button to download the kubeconfig file to control the cluster |

| Task Status | Task status for the command to the cluster |

| k8s API status | Behavioral status of Kubernetes API endpoints |

| k8s Node Status | Status of Kubernetes Node resources |

The meaning of each icon of the task status is as follows.

| Icon | Meaning |

|---|---|

| Green solid icon | Normal end of task |

| Circular rotation icon | Task in progress |

| Red solid icon | Task failed |

| Gray solid icon | Cluster unavailable |

The meaning of each icon in the k8s API status is as follows:

| Icon | Meaning |

|---|---|

| Green solid icon | Working properly |

| Yellow solid icon | Information may be inaccurate because it is close to expiring (5 minutes) |

| Red solid icon | Kubernetes API endpoint is not working properly or information expired |

The meaning of each icon in k8s Node status is as follows.

| Icon | Meaning |

|---|---|

| Green solid icon | All nodes in the cluster are in the Ready state |

| Yellow solid icon | Kubernetes API endpoints are not working properly or there are nodes in NotReady in the cluster |

| Red solid icon | All nodes in cluster are in NotReady |
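The k8s Node status mapping in the table above can be expressed as a small function. This is only an illustrative sketch of the documented rules, not the console's implementation; the function name, the color strings, and the treatment of an empty node list are assumptions.

```python
def k8s_node_status_icon(api_ok, node_ready):
    """Map cluster state to the documented icon color.

    api_ok:     True if the Kubernetes API endpoint is working properly.
    node_ready: list of booleans, one per node (True means Ready).
    Assumption: an empty node list is treated like "all Ready".
    """
    if not api_ok:
        return "yellow"  # API endpoint not working properly
    if all(node_ready):
        return "green"   # every node is Ready
    if not any(node_ready):
        return "red"     # every node is NotReady
    return "yellow"      # some, but not all, nodes are NotReady
```

For example, `k8s_node_status_icon(True, [True, False, True])` returns `"yellow"`.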

When you select a cluster, cluster information appears at the bottom.

| Item | Description |

|---|---|

| Cluster Name | Name and ID of Kubernetes Cluster |

| Node Count | Number of instances of all nodes comprising a cluster |

| Kubernetes Version | Kubernetes version in service |

| CNI | Kubernetes CNI type in service |

| K8s Service Network | CIDR of the cluster's service objects |

| Pod Network | Kubernetes pod network in service |

| Pod Subnet Size | Kubernetes pod subnet size in service |

| VPC | VPC network attached to cluster |

| Subnet | Subnet associated with the node instances comprising a cluster |

| API Endpoint | URI of API endpoint to access cluster for operation |

| Configuration File | Download button of configuration file required to access cluster for operation |

Deleting Clusters

Select the cluster to delete and click Delete Clusters to delete it. Deletion takes about 5 minutes; more time may be required depending on the cluster status.

Change Cluster OWNER

[Notes] The cluster OWNER is set to the user who created the cluster, but can be changed to another user when required.

A cluster operates based on the permissions of the OWNER set when it was created. These permissions are used to integrate Kubernetes with NHN Cloud basic infrastructure services. The basic infrastructure services used by Kubernetes are as follows.

| Basic Infrastructure Service | Kubernetes Integration |

|---|---|

| Compute | Use Instance service when scaling worker nodes out or in through Kubernetes Cluster Autoscaler |

| Network | Use Load Balancer and Floating IP services when creating Kubernetes Load Balancer service |

| Storage | Use Block Storage service when creating Kubernetes Persistent Volume |

If any of the following situations occur during operation, basic infrastructure services cannot be used in Kubernetes.

| Situation | Cause |

|---|---|

| Cluster OWNER departure from the project | Project member removal due to cluster OWNER resignation or manual project member removal |

| Change to cluster OWNER permissions | Permissions added or deleted after the cluster is created |

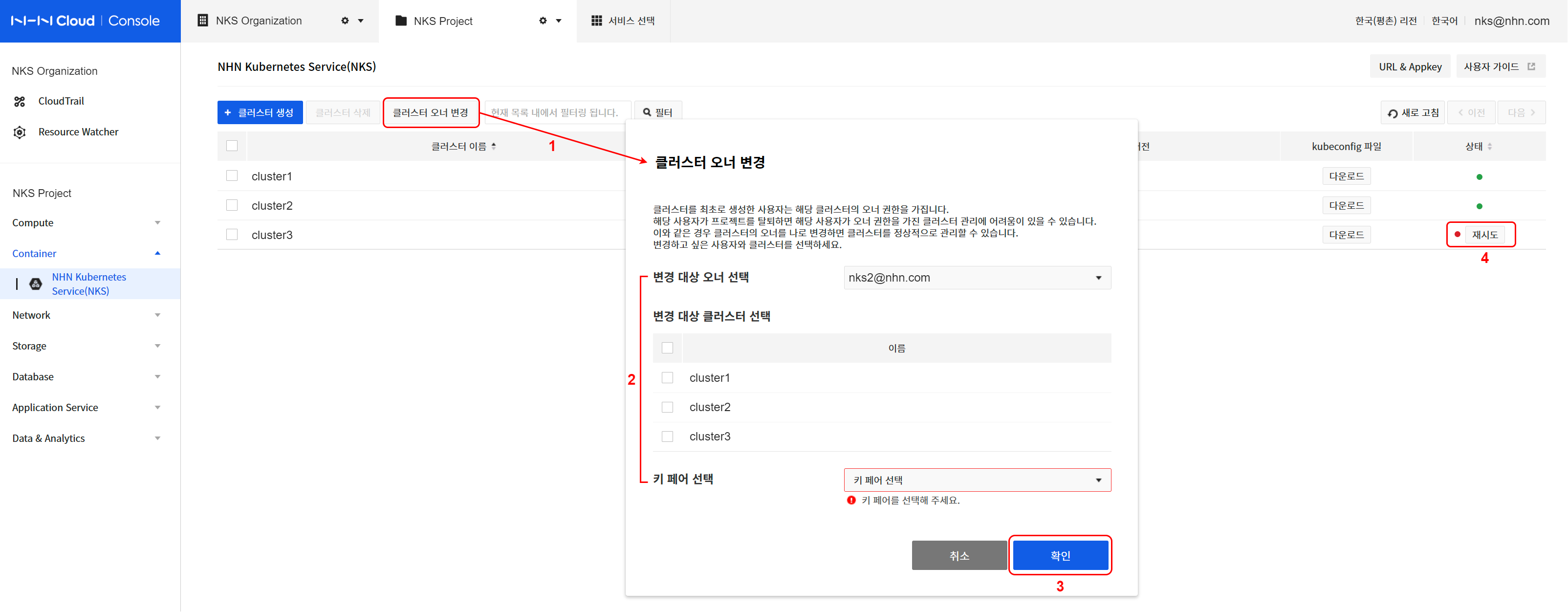

If a cluster has operational problems for the reasons above and cannot be normalized through member and permission settings, you can normalize it by changing the cluster OWNER in the NKS console. The procedure for changing the cluster OWNER is as follows.

[Notes] The user who changes the cluster OWNER in the console becomes the new cluster OWNER. After the change, the cluster operates based on the new OWNER's permissions.

- Click Change Cluster Owner.
- Specify the owner and the cluster to change.
    - Specify the current owner to list the clusters that can be changed.
    - Select the cluster to change from the listed clusters.
    - Specify a key pair to use when creating new worker nodes.
    - Click Confirm to proceed with the owner change.
- Check the status of the cluster being changed.
    - Check the progress of the task for the specified cluster in the list of clusters in the NKS console.
    - While the OWNER change is in progress, the cluster status is HANDOVER_IN_PROGRESS. When the change completes, the status becomes HANDOVER_COMPLETE.
        - All node groups under the cluster also change to the corresponding HANDOVER_* status.
    - If there is a problem with the change, the status becomes HANDOVER_FAILED, and the cluster configuration cannot be changed until normalization completes.
        - In this status, the Retry button is shown next to the status icon.
        - To normalize the cluster status, click Retry, specify a key pair, and click Confirm.

[Caution] Change cluster OWNER and key pair

Key pair resources of NHN Cloud basic infrastructure services belong to a specific user and cannot be shared with other users. (The key pair resource is separate from the PEM file downloaded after generating the key pair in the NHN Cloud console.) Therefore, when the cluster OWNER is changed, the key pair resource specified when the cluster was created must be replaced with one that belongs to the new OWNER.

You can connect to worker nodes (instances) created after the cluster OWNER change using the newly specified key pair (PEM file). However, you still need the key pair (PEM file) of the previous OWNER to connect to worker nodes created before the OWNER change. Therefore, even after the OWNER is changed, the existing key pair (PEM file) must be carefully retained and managed at the project manager level.

Node Group

A node group is a set of worker node instances that make up a Kubernetes cluster.

Querying Node Groups

Click a cluster name in the list to see its node groups. Select a node group to display its summary information at the bottom.

| Item | Description |

|---|---|

| Node Group Name | Name of the node group |

| Node Count | The number of nodes in a node group |

| Kubernetes Version | Kubernetes version information applied to the node group |

| Availability Zone | Availability zone information applied to node groups |

| Flavor | Node group instance type |

| Image Type | Node group image type |

| Task Status | Task status for command to the node group |

| k8s Node Status | Status of Kubernetes Node resources belonging to a node group |

The meaning of each icon of the task status is as follows.

| Icon | Meaning |

|---|---|

| Green solid icon | Normal end of task |

| Circular rotation icon | Task in progress |

| Red solid icon | Task failed |

| Gray solid icon | Clusters and node groups unavailable |

The meaning of each icon of k8s Node status is as follows.

| Icon | Meaning |

|---|---|

| Green solid icon | All nodes in the node group are in the Ready state |

| Yellow solid icon | The Kubernetes API endpoint is not working properly or there are nodes in the NotReady status in the node group |

| Red solid icon | All nodes in the node group are in the NotReady status |

When you select a node group, node group information appears at the bottom.

- Basic Information: On the Basic Information tab, you can check the following:

| Item | Description |

|---|---|

| Node Group Name | Name and ID of a node group |

| Cluster Name | Name and ID of a cluster to which a node group is included |

| Kubernetes Version | Kubernetes version in service |

| Availability Zone | Area in which a node group instance is created |

| Flavor | Specifications of a node group instance |

| Image Type | Type of image for a node group instance |

| Block Storage Size | Size of block storage for a node group instance |

| Created Date | Time when node group was created |

| Modified Date | Last time when node group was modified |

- List of Nodes: On the List of Nodes tab, you can find the list of instances comprising a node group.

Creating Node Groups

A default node group is created along with a new cluster, and you can create additional node groups as needed. For example, create an additional node group if containers require higher instance specifications or if more worker node instances are needed to scale out. Click Create Node Groups on the node group list page to open the node group creation page. The following items are required to create a node group:

| Item | Description |

|---|---|

| Availability Zone | Area to create instances comprising a cluster |

| Node Group Name | Name of an additional node group. It is limited to 20 characters, and only lowercase English letters, numbers, and '-' can be entered. It must start with a lowercase letter and end with a lowercase letter or number. RFC 4122 compliant UUID formats cannot be used. |

| Flavor | Specifications of an instance for additional node group |

| Node Count | Number of instances for additional node group |

| Key Pair | Key pair to access additional node group |

| Block Storage Type | Type of block storage of instance for additional node group |

| Block Storage Size | Size of block storage of instance for additional node group |

| Additional Network | Additional network and subnet to create in the additional node group |

Enter the required information and click Create Node Groups to start creating the node group. You can check the status in the list of node groups. Creation takes about 5 minutes; more time may be required depending on the node group settings.

[Caution] Only the user who created the cluster can create node groups.

Deleting Node Groups

Select the node group to delete from the list of node groups and click Delete Node Groups to delete it. Deletion takes about 5 minutes; more time may be required depending on the node group status.

Adding Nodes to a Node Group

Nodes can be added to running node groups. Click the node list tab on the node group information page to see the current list of nodes. To add nodes, click the Add Node button and enter the number of nodes to add.

[Caution] Nodes cannot be manually added to node groups on which autoscaler is enabled.

Deleting Nodes from a Node Group

Nodes can be deleted from running node groups. Click the node list tab on the node group information page to see the current list of nodes. When you select the nodes to delete and click the Delete Node button, a confirmation dialog box appears. Confirm the node name and click OK to delete the node.

[Caution] Pods that were running on a deleted node are forcibly shut down. To safely move pods from a node that is about to be deleted to other nodes, you must run the "drain" command. New pods can be scheduled on nodes even after they are drained. To prevent new pods from being scheduled on nodes that are to be deleted, you must run the "cordon" command. For more information on safe node management, see the document below.

[Caution] Nodes cannot be manually deleted from node groups on which autoscaler is enabled.
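The cordon and drain steps mentioned in the caution on deleting nodes can be scripted before removing a node in the console. The following is a minimal sketch that wraps the standard `kubectl cordon` and `kubectl drain` commands; the helper names and the `dry_run` parameter are hypothetical, and the `--ignore-daemonsets` flag is an assumption that suits clusters running DaemonSet-managed pods (for example, CNI agents).

```python
import subprocess


def safe_removal_commands(node_name):
    """Build the kubectl commands to run before deleting a node."""
    return [
        # Mark the node unschedulable so no new pods are placed on it.
        ["kubectl", "cordon", node_name],
        # Evict running pods; DaemonSet-managed pods are left in place.
        ["kubectl", "drain", node_name, "--ignore-daemonsets"],
    ]


def prepare_node_for_deletion(node_name, dry_run=True):
    """Print the commands (dry run) or execute them against the cluster."""
    commands = safe_removal_commands(node_name)
    for command in commands:
        if dry_run:
            print(" ".join(command))
        else:
            # Requires kubectl and a kubeconfig for the target cluster.
            subprocess.run(command, check=True)
    return commands
```

Calling `prepare_node_for_deletion("my-cluster-default-w-vdqxpwisjjsk-node-1")` with the default `dry_run=True` only prints the two commands, so they can be reviewed before being run for real.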

Stopping and Starting Nodes

Nodes in a node group can be stopped and started again. Click the node list tab on the node group information page to see the current list of nodes. To stop nodes, select them and click the stop button. To restart stopped nodes, select them and click the start button.

Action process

When you stop a started node, the following steps take place in order.

- The node is drained.
- The node is deleted from Kubernetes node resources.
- The node changes to the SHUTDOWN status at the instance level.

When you start a stopped node, the following steps take place in order.

- The node changes to the ACTIVE status at the instance level.
- The node is added to Kubernetes node resources again.

Constraints

The stop and start node feature has the following constraints.

- Only a started node can be stopped, and only a stopped node can be started.

- You cannot stop all nodes in a worker node group.

- Nodes cannot be stopped from node groups on which autoscaler is enabled.

- Autoscaler cannot be enabled when the node group contains stopped nodes.

- You cannot upgrade node groups that contain stopped nodes.
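The stop-related constraints above can be summarized as a pre-check. This is an illustrative sketch only, not how NKS enforces the rules; the function name, the `"started"`/`"stopped"` state strings, and the reading of "cannot stop all nodes" as "at least one node must remain started" are assumptions.

```python
def stop_violations(node_states, to_stop, autoscaler_enabled):
    """Return the documented constraints a stop request would violate.

    node_states: dict mapping node name to "started" or "stopped".
    to_stop:     list of node names the user wants to stop.
    """
    problems = []
    if autoscaler_enabled:
        problems.append("autoscaler is enabled on the node group")
    for name in to_stop:
        if node_states.get(name) != "started":
            problems.append(f"{name} is not in the started state")
    still_started = [
        name
        for name, state in node_states.items()
        if state == "started" and name not in to_stop
    ]
    if not still_started:
        problems.append("at least one node must remain started")
    return problems


states = {"node-0": "started", "node-1": "started", "node-2": "stopped"}
```

With these states, stopping `node-0` alone passes the check, while stopping both `node-0` and `node-1` is rejected because no started node would remain.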

Display status

The status icon is displayed according to the node status on the node list tab. The status colors are as follows.

- Green: A node in the start status

- Gray: A node in the stop status

- Red: A node in the abnormal status

Using a GPU node group

When you need to run GPU-based workloads through Kubernetes, you can create a node group composed of GPU instances.

To create a GPU node group, select a g2 type flavor when creating a cluster or node group.

[Note] The GPUs provided by NHN Cloud GPU instances are NVIDIA GPUs. (Check the available GPU specifications.) The nvidia-device-plugin, which Kubernetes requires to use NVIDIA GPUs, is installed automatically when a GPU node group is created.

To check the default setting and run a simple operation test for the created GPU node, use the following method:

Node level status check

Access the GPU node and run the nvidia-smi command.

The GPU driver is working normally if the output shows the following:

```
$ nvidia-smi
Mon Jul 27 14:38:07 2020
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 418.152.00   Driver Version: 418.152.00   CUDA Version: 10.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|===============================+======================+======================|
|   0  Tesla T4            Off  | 00000000:00:05.0 Off |                    0 |
| N/A   30C    P8     9W /  70W |      0MiB / 15079MiB |      0%      Default |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                       GPU Memory |
|  GPU       PID   Type   Process name                             Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
```

Kubernetes level status check

Use the kubectl command to view information about available GPU resources at the cluster level.

Below is a command, with its output, that displays the number of GPUs available on each node.

```
$ kubectl get nodes -A -o custom-columns='NAME:.metadata.name,GPU Allocatable:.status.allocatable.nvidia\.com/gpu,GPU Capacity:.status.capacity.nvidia\.com/gpu'
NAME                                       GPU Allocatable   GPU Capacity
my-cluster-default-w-vdqxpwisjjsk-node-1   1                 1
```
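The same information can also be read programmatically from the JSON output of `kubectl get nodes -o json`. The following is a minimal sketch that parses that output; the function name `gpu_counts` is hypothetical, and the sample document contains only the fields the function reads.

```python
import json


def gpu_counts(nodes_json):
    """Extract nvidia.com/gpu allocatable and capacity values per node.

    nodes_json: the text printed by `kubectl get nodes -o json`.
    Nodes without the nvidia.com/gpu resource are reported as 0.
    """
    result = {}
    for item in json.loads(nodes_json).get("items", []):
        name = item["metadata"]["name"]
        status = item.get("status", {})
        result[name] = {
            "allocatable": int(status.get("allocatable", {}).get("nvidia.com/gpu", 0)),
            "capacity": int(status.get("capacity", {}).get("nvidia.com/gpu", 0)),
        }
    return result


# Trimmed sample input for illustration; real output has many more fields.
sample = json.dumps({
    "items": [
        {
            "metadata": {"name": "my-cluster-default-w-vdqxpwisjjsk-node-1"},
            "status": {
                "allocatable": {"cpu": "4", "nvidia.com/gpu": "1"},
                "capacity": {"cpu": "4", "nvidia.com/gpu": "1"},
            },
        }
    ]
})
counts = gpu_counts(sample)
```

In practice the input could be obtained with `subprocess.run(["kubectl", "get", "nodes", "-o", "json"], capture_output=True, text=True).stdout`, assuming kubectl is configured for the cluster.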

Sample workload execution for GPU testing

GPU nodes that belong to Kubernetes clusters provide resources such as nvidia.com/gpu in addition to CPU and memory.

To use a GPU, request the nvidia.com/gpu resource in the pod specification, as shown in the sample file below.

- resnet.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: resnet-gpu-pod
spec:
  imagePullSecrets:
    - name: nvcr.dgxkey
  containers:
    - name: resnet
      image: nvcr.io/nvidia/tensorflow:18.07-py3
      command: ["mpiexec"]
      args: ["--allow-run-as-root", "--bind-to", "socket", "-np", "1", "python", "/opt/tensorflow/nvidia-examples/cnn/resnet.py", "--layers=50", "--precision=fp16", "--batch_size=64", "--num_iter=90"]
      resources:
        limits:
          nvidia.com/gpu: 1
```

You should see the following results if you run the above file.

```
$ kubectl create -f resnet.yaml
pod/resnet-gpu-pod created

$ kubectl get pods resnet-gpu-pod
NAME             READY   STATUS    RESTARTS   AGE
resnet-gpu-pod   0/1     Running   0          17s

$ kubectl logs resnet-gpu-pod -n default -f
PY 3.5.2 (default, Nov 23 2017, 16:37:01)
[GCC 5.4.0 20160609]
TF 1.8.0
Script arguments:
  --layers 50
  --display_every 10
  --iter_unit epoch
  --batch_size 64
  --num_iter 100
  --precision fp16
Training
WARNING:tensorflow:Using temporary folder as model directory: /tmp/tmpjw90ypze
2020-07-31 00:57:23.020712: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:898] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2020-07-31 00:57:23.023190: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1356] Found device 0 with properties:
name: Tesla T4 major: 7 minor: 5 memoryClockRate(GHz): 1.59
pciBusID: 0000:00:05.0
totalMemory: 14.73GiB freeMemory: 14.62GiB
2020-07-31 00:57:23.023226: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1435] Adding visible gpu devices: 0
2020-07-31 00:57:23.846680: I tensorflow/core/common_runtime/gpu/gpu_device.cc:923] Device interconnect StreamExecutor with strength 1 edge matrix:
2020-07-31 00:57:23.846743: I tensorflow/core/common_runtime/gpu/gpu_device.cc:929] 0
2020-07-31 00:57:23.846753: I tensorflow/core/common_runtime/gpu/gpu_device.cc:942] 0: N
2020-07-31 00:57:23.847023: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1053] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 14151 MB memory) -> physical GPU (device: 0, name: Tesla T4, pci bus id: 0000:00:05.0, compute capability: 7.5)
Step Epoch Img/sec Loss LR
1 1.0 3.1 7.936 8.907 2.00000
10 10.0 68.3 1.989 2.961 1.65620
20 20.0 214.0 0.002 0.978 1.31220
30 30.0 213.8 0.008 0.979 1.00820
40 40.0 210.8 0.095 1.063 0.74420
50 50.0 211.9 0.261 1.231 0.52020
60 60.0 211.6 0.104 1.078 0.33620
70 70.0 211.3 0.340 1.317 0.19220
80 80.0 206.7 0.168 1.148 0.08820
90 90.0 210.4 0.092 1.073 0.02420
100 100.0 210.4 0.001 0.982 0.00020
```

[Note] To prevent workloads that do not require GPU from being allocated to GPU nodes, see Taints and Tolerations.

Autoscaler

Autoscaler is a feature that automatically adjusts the number of nodes when a node group does not have enough resources available to schedule pods or when node utilization remains below a certain level. Autoscaler can be set for each node group, and the autoscaler of each node group operates independently of the others. This feature is based on cluster-autoscaler, an officially supported feature of the Kubernetes project. For more information, refer to Cluster Autoscaler.

[Note] The version of the cluster-autoscaler applied to NHN Kubernetes Service (NKS) is 1.19.0.

Glossary

Terms used in relation to the autoscaler and their meanings are as follows:

| Term | Meaning |
|---|---|
| Scale Up | Increase the number of nodes. |
| Scale Down | Decrease the number of nodes. |

[Caution] If worker nodes are working in an environment with no Internet connection, autoscaler container images must be manually installed on the worker nodes. This task is required for the following targets:

- Pangyo Region: Node groups created before November 24, 2020

- Pyeongchon Region: Node groups created before November 19, 2020

The container image path of autoscaler is as follows:

- k8s.gcr.io/autoscaling/cluster-autoscaler:v1.19.0

Autoscaler Settings

Autoscaler is set and operated for each node group. Autoscaler can be set up in various ways, as listed below:

- Set up on the default node groups when creating a cluster.

- Set up on additional node groups when adding the node groups.

- Set up on existing node groups.

Activating the autoscaler enables the following options:

| Settings Item | Meaning | Valid Range | Default | Unit |
|---|---|---|---|---|
| Minimum Node Count | Minimum number of nodes to which the node group can be scaled down | 1-10 | 1 | nodes |
| Maximum Node Count | Maximum number of nodes to which the node group can be scaled up | 1-10 | 10 | nodes |
| Scaling Down | Enable/Disable node scale-down | Enable/Disable | Enable | - |
| Scale Down Utilization Threshold | Resource utilization below which a node becomes a candidate for scale-down | 1-100 | 50 | % |
| Scale Down Unneeded Time | How long the resource utilization of a node must stay below the threshold before the node is scaled down | 1-1440 | 10 | minutes |
| Scale Down Delay After Add | Delay after a scale-up before monitoring for scale-down targets starts | 10-1440 | 10 | minutes |

[Caution] Nodes cannot be manually added to or deleted from node groups on which autoscaler is enabled.
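The valid ranges in the table above can be checked before applying a configuration. The following is a minimal sketch, not part of NKS: the class name `AutoscalerSettings`, its field names, and the rule that the minimum must not exceed the maximum are assumptions made for illustration. Only the ranges and defaults come from the table.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AutoscalerSettings:
    """Hypothetical container for the settings in the table (defaults from the table)."""
    min_nodes: int = 1
    max_nodes: int = 10
    scale_down_enabled: bool = True
    utilization_threshold_pct: int = 50
    unneeded_time_min: int = 10
    delay_after_add_min: int = 10

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means every value is in range."""
        errors = []
        if not 1 <= self.min_nodes <= 10:
            errors.append("Minimum Node Count must be 1-10")
        if not 1 <= self.max_nodes <= 10:
            errors.append("Maximum Node Count must be 1-10")
        if self.min_nodes > self.max_nodes:
            # Assumption: the guide does not state this rule explicitly.
            errors.append("Minimum Node Count must not exceed Maximum Node Count")
        if not 1 <= self.utilization_threshold_pct <= 100:
            errors.append("Scale Down Utilization Threshold must be 1-100")
        if not 1 <= self.unneeded_time_min <= 1440:
            errors.append("Scale Down Unneeded Time must be 1-1440")
        if not 10 <= self.delay_after_add_min <= 1440:
            errors.append("Scale Down Delay After Add must be 10-1440")
        return errors


if __name__ == "__main__":
    print(AutoscalerSettings(max_nodes=5, unneeded_time_min=3).validate())
    print(AutoscalerSettings(delay_after_add_min=5).validate())
```

Returning a list of messages rather than raising on the first problem lets a caller report every out-of-range value at once.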

Scale-up/down Conditions

Scales up if all of the following conditions are met:

- There are no more available nodes to schedule pods.

- The current number of nodes is below the maximum limit.

Scales down if all of the following conditions are met:

- Node resource usage stays below the Scale Down Utilization Threshold for the duration set in Scale Down Unneeded Time.

- The current number of nodes exceeds the minimum limit.

If some nodes contain at least one pod that meets the following conditions, then they will be excluded from the list of nodes to be scaled down:

- Pods restricted by "PodDisruptionBudget"

- Pods in the "kube-system" namespace

- Pods not started by control objects such as "deployment" or "replicaset"

- Pods that use the local storage

- Pods that cannot be moved to other nodes because of the restrictions such as "node selector"

For more information on the conditions for scale-up/down, see Cluster Autoscaler FAQ.
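The conditions above can be summarized as two predicates. This is a simplified sketch of the decision logic as described in this section, not the actual cluster-autoscaler implementation; the function and parameter names are hypothetical, and `has_blocking_pod` stands for any of the pod conditions listed above that exclude a node from scale-down.

```python
def should_scale_up(unschedulable_pods: int, current_nodes: int, max_nodes: int) -> bool:
    """Scale up only when pods cannot be scheduled and the group is below its maximum."""
    return unschedulable_pods > 0 and current_nodes < max_nodes


def should_scale_down(minutes_below_threshold: int, unneeded_time_min: int,
                      current_nodes: int, min_nodes: int,
                      has_blocking_pod: bool = False) -> bool:
    """Decide whether a single node may be removed.

    has_blocking_pod is True when the node runs a pod that excludes it from
    scale-down (for example, a pod in the kube-system namespace).
    """
    if has_blocking_pod:
        return False
    return minutes_below_threshold >= unneeded_time_min and current_nodes > min_nodes


if __name__ == "__main__":
    print(should_scale_up(unschedulable_pods=9, current_nodes=1, max_nodes=5))
    print(should_scale_down(minutes_below_threshold=3, unneeded_time_min=3,
                            current_nodes=3, min_nodes=1))
```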

Operation Example

Let's check how the autoscaler works through the following example:

1. Enabling Autoscaler

Enable autoscaling on the default node group of the cluster you want. For this example, the number of nodes for the default node group has been set to 1 and the autoscaler settings are configured as follows:

| Settings Item | Value |
|---|---|
| Minimum Node Count | 1 |
| Maximum Node Count | 5 |
| Scaling Down | Enable |
| Scale Down Utilization Threshold | 50 |
| Scale Down Unneeded Time | 3 |
| Scale Down Delay After Add | 10 |

2. Deploying Pods

Deploy pods using the following manifest:

[Caution] As in this manifest, every manifest must define resource requests for its containers.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 15
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.14.2
          ports:
            - containerPort: 80
          resources:
            requests:
              cpu: "100m"

Because the total amount of CPU requested by the pods is greater than the resources that a single node can provide, some of the pods are left in the Pending status, as shown below. In this case, the node group will scale up.

# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-756fd4cdf-5gftm 1/1 Running 0 34s

nginx-deployment-756fd4cdf-64gtv 0/1 Pending 0 34s

nginx-deployment-756fd4cdf-7bsst 0/1 Pending 0 34s

nginx-deployment-756fd4cdf-8892p 1/1 Running 0 34s

nginx-deployment-756fd4cdf-8k4cc 1/1 Running 0 34s

nginx-deployment-756fd4cdf-cprp7 0/1 Pending 0 34s

nginx-deployment-756fd4cdf-cvs97 1/1 Running 0 34s

nginx-deployment-756fd4cdf-h7ftk 1/1 Running 0 34s

nginx-deployment-756fd4cdf-hv2fz 0/1 Pending 0 34s

nginx-deployment-756fd4cdf-j789l 0/1 Pending 0 34s

nginx-deployment-756fd4cdf-jrkfj 0/1 Pending 0 34s

nginx-deployment-756fd4cdf-m887q 0/1 Pending 0 34s

nginx-deployment-756fd4cdf-pvnfc 0/1 Pending 0 34s

nginx-deployment-756fd4cdf-wrj8b 1/1 Running 0 34s

nginx-deployment-756fd4cdf-x7ns5 0/1 Pending 0 34s
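The split between Running and Pending pods follows from simple arithmetic on CPU requests. The sketch below illustrates it under stated assumptions: the allocatable CPU (1000m) and the CPU already requested by system pods (400m) are hypothetical values chosen for illustration, not figures taken from this cluster. Only the 100m request and the 15 replicas come from the manifest.

```python
def pods_that_fit(allocatable_millicores: int, reserved_millicores: int,
                  request_millicores: int) -> int:
    """Number of pods whose CPU requests fit into the CPU still free on one node."""
    free = max(allocatable_millicores - reserved_millicores, 0)
    return free // request_millicores


if __name__ == "__main__":
    replicas = 15
    # Hypothetical node: 1000m allocatable, 400m already requested by system pods.
    running = min(replicas, pods_that_fit(1000, 400, 100))
    pending = replicas - running
    print(f"running={running} pending={pending}")
```

With these assumed values, six pods fit on the single node and nine remain Pending, which is the kind of shortfall that triggers a scale-up.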

3. Checking Node Scale-up

The following is the node list before scale-up:

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

autoscaler-test-default-w-ohw5ab5wpzug-node-0 Ready <none> 45m v1.23.3

After 5-10 minutes, the nodes should be scaled up as shown below:

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

autoscaler-test-default-w-ohw5ab5wpzug-node-0 Ready <none> 48m v1.23.3

autoscaler-test-default-w-ohw5ab5wpzug-node-1 Ready <none> 77s v1.23.3

autoscaler-test-default-w-ohw5ab5wpzug-node-2 Ready <none> 78s v1.23.3

The pods that were in Pending status are now normally scheduled after the scale-up.

# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-deployment-756fd4cdf-5gftm 1/1 Running 0 4m29s 10.100.8.13 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

nginx-deployment-756fd4cdf-64gtv 1/1 Running 0 4m29s 10.100.22.5 autoscaler-test-default-w-ohw5ab5wpzug-node-1 <none> <none>

nginx-deployment-756fd4cdf-7bsst 1/1 Running 0 4m29s 10.100.22.4 autoscaler-test-default-w-ohw5ab5wpzug-node-1 <none> <none>

nginx-deployment-756fd4cdf-8892p 1/1 Running 0 4m29s 10.100.8.10 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

nginx-deployment-756fd4cdf-8k4cc 1/1 Running 0 4m29s 10.100.8.12 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

nginx-deployment-756fd4cdf-cprp7 1/1 Running 0 4m29s 10.100.12.7 autoscaler-test-default-w-ohw5ab5wpzug-node-2 <none> <none>

nginx-deployment-756fd4cdf-cvs97 1/1 Running 0 4m29s 10.100.8.14 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

nginx-deployment-756fd4cdf-h7ftk 1/1 Running 0 4m29s 10.100.8.11 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

nginx-deployment-756fd4cdf-hv2fz 1/1 Running 0 4m29s 10.100.12.5 autoscaler-test-default-w-ohw5ab5wpzug-node-2 <none> <none>

nginx-deployment-756fd4cdf-j789l 1/1 Running 0 4m29s 10.100.22.6 autoscaler-test-default-w-ohw5ab5wpzug-node-1 <none> <none>

nginx-deployment-756fd4cdf-jrkfj 1/1 Running 0 4m29s 10.100.12.4 autoscaler-test-default-w-ohw5ab5wpzug-node-2 <none> <none>

nginx-deployment-756fd4cdf-m887q 1/1 Running 0 4m29s 10.100.22.3 autoscaler-test-default-w-ohw5ab5wpzug-node-1 <none> <none>

nginx-deployment-756fd4cdf-pvnfc 1/1 Running 0 4m29s 10.100.12.6 autoscaler-test-default-w-ohw5ab5wpzug-node-2 <none> <none>

nginx-deployment-756fd4cdf-wrj8b 1/1 Running 0 4m29s 10.100.8.15 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

nginx-deployment-756fd4cdf-x7ns5 1/1 Running 0 4m29s 10.100.12.3 autoscaler-test-default-w-ohw5ab5wpzug-node-2 <none> <none>

You can check scale-up events with the following command:

# kubectl get events --field-selector reason="TriggeredScaleUp"

LAST SEEN TYPE REASON OBJECT MESSAGE

4m Normal TriggeredScaleUp pod/nginx-deployment-756fd4cdf-64gtv pod triggered scale-up: [{default-worker-bf5999ab 1->3 (max: 5)}]

4m Normal TriggeredScaleUp pod/nginx-deployment-756fd4cdf-7bsst pod triggered scale-up: [{default-worker-bf5999ab 1->3 (max: 5)}]

...

4. Checking Node Scale-down after Deleting Pods

Deleting the current deployments also deletes the deployed pods.

# kubectl get pods

NAME READY STATUS RESTARTS AGE

nginx-deployment-756fd4cdf-5gftm 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-64gtv 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-7bsst 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-8892p 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-8k4cc 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-cprp7 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-h7ftk 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-hv2fz 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-j789l 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-jrkfj 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-m887q 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-pvnfc 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-wrj8b 0/1 Terminating 0 20m

nginx-deployment-756fd4cdf-x7ns5 0/1 Terminating 0 20m

#

# kubectl get pods

No resources found in default namespace.

#

After a while, you can see nodes are scaled down to 1. The time it takes to scale down may vary depending on your settings.

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

autoscaler-test-default-w-ohw5ab5wpzug-node-0 Ready <none> 71m v1.23.3

You can check scale-down events with the following command:

# kubectl get events --field-selector reason="ScaleDown"

LAST SEEN TYPE REASON OBJECT MESSAGE

13m Normal ScaleDown node/autoscaler-test-default-w-ohw5ab5wpzug-node-1 node removed by cluster autoscaler

13m Normal ScaleDown node/autoscaler-test-default-w-ohw5ab5wpzug-node-2 node removed by cluster autoscaler

You can check the status of each node group's autoscaler through configmap/cluster-autoscaler-status. Configmaps are created in different namespaces per node group. The following is the naming convention for namespace per node group created by the autoscaler:

- Format: nhn-ng-{node group name}

- Enter a node group name in {node group name}.

- The default node group name is "default-worker."
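The naming convention above is easy to encode when you need to look up the status of several node groups. This is a small sketch; the helper names `autoscaler_namespace` and `status_command` are hypothetical, while the namespace format and the kubectl arguments follow this guide.

```python
from typing import List


def autoscaler_namespace(node_group_name: str = "default-worker") -> str:
    """Build the namespace name in the form nhn-ng-{node group name}."""
    return f"nhn-ng-{node_group_name}"


def status_command(node_group_name: str = "default-worker") -> List[str]:
    """Build the kubectl argument list that reads the autoscaler status ConfigMap."""
    return [
        "kubectl", "get", "configmap/cluster-autoscaler-status",
        "-n", autoscaler_namespace(node_group_name),
        "-o", "yaml",
    ]


if __name__ == "__main__":
    print(" ".join(status_command()))
```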

The status of the default node group's autoscaler can be checked using the following method. For more information, see Cluster Autoscaler FAQ.

# kubectl get configmap/cluster-autoscaler-status -n nhn-ng-default-worker -o yaml

apiVersion: v1

data:

status: |+

Cluster-autoscaler status at 2020-11-03 12:39:12.190150095 +0000 UTC:

Cluster-wide:

Health: Healthy (ready=1 unready=0 notStarted=0 longNotStarted=0 registered=1 longUnregistered=0)

LastProbeTime: 2020-11-03 12:39:12.185954244 +0000 UTC m=+43.664545435

LastTransitionTime: 2020-11-03 12:38:41.705407217 +0000 UTC m=+13.183998415

ScaleUp: NoActivity (ready=1 registered=1)

LastProbeTime: 2020-11-03 12:39:12.185954244 +0000 UTC m=+43.664545435

LastTransitionTime: 2020-11-03 12:38:41.705407217 +0000 UTC m=+13.183998415

ScaleDown: NoCandidates (candidates=0)

LastProbeTime: 2020-11-03 12:39:12.185954244 +0000 UTC m=+43.664545435

LastTransitionTime: 2020-11-03 12:38:41.705407217 +0000 UTC m=+13.183998415

NodeGroups:

Name: default-worker-f9a9ee5e

Health: Healthy (ready=1 unready=0 notStarted=0 longNotStarted=0 registered=1 longUnregistered=0 cloudProviderTarget=1 (minSize=1, maxSize=5))

LastProbeTime: 2020-11-03 12:39:12.185954244 +0000 UTC m=+43.664545435

LastTransitionTime: 2020-11-03 12:38:41.705407217 +0000 UTC m=+13.183998415

ScaleUp: NoActivity (ready=1 cloudProviderTarget=1)

LastProbeTime: 2020-11-03 12:39:12.185954244 +0000 UTC m=+43.664545435

LastTransitionTime: 2020-11-03 12:38:41.705407217 +0000 UTC m=+13.183998415

ScaleDown: NoCandidates (candidates=0)

LastProbeTime: 2020-11-03 12:39:12.185954244 +0000 UTC m=+43.664545435

LastTransitionTime: 2020-11-03 12:38:41.705407217 +0000 UTC m=+13.183998415

kind: ConfigMap

metadata:

annotations:

cluster-autoscaler.kubernetes.io/last-updated: 2020-11-03 12:39:12.190150095 +0000

UTC

creationTimestamp: "2020-11-03T12:38:28Z"

name: cluster-autoscaler-status

namespace: nhn-ng-default-worker

resourceVersion: "1610"

selfLink: /api/v1/namespaces/nhn-ng-default-worker/configmaps/cluster-autoscaler-status

uid: e72bd1a2-a56f-41b4-92ee-d11600386558

[Note] As for the status information, the Cluster-wide area has the same information as NodeGroups.

Example of Working with HPA (HorizontalPodAutoscaler)

Horizontal Pod Autoscaler (HPA) observes resource usage, such as CPU usage, to automatically scale the number of pods of a ReplicationController, Deployment, ReplicaSet, or StatefulSet. As the number of pods is adjusted, the nodes may end up with too many or too few available resources. In that case, you can use the Autoscaler feature to increase or decrease the number of nodes. In this example, we will see how HPA and Autoscaler can work together to deal with this issue. For more information on HPA, see Horizontal Pod Autoscaler.

1. Enabling Autoscaler

Enable Autoscaler as shown in the above example.

2. Configuring HPA

Deploy a container that generates CPU load for a certain amount of time after receiving a web request. Then, expose the service. The following is the content of the php-apache.yaml file.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: php-apache
spec:
  selector:
    matchLabels:
      run: php-apache
  replicas: 1
  template:
    metadata:
      labels:
        run: php-apache
    spec:
      containers:
        - name: php-apache
          image: k8s.gcr.io/hpa-example
          ports:
            - containerPort: 80
          resources:
            limits:
              cpu: 500m
            requests:
              cpu: 200m
---
apiVersion: v1
kind: Service
metadata:
  name: php-apache
  labels:
    run: php-apache
spec:
  ports:
    - port: 80
  selector:
    run: php-apache

# kubectl apply -f php-apache.yaml

deployment.apps/php-apache created

service/php-apache created

Now, set up HPA. For the php-apache deployment object just created in the previous step, set as follows: min pod count = 1, max pod count = 30, target CPU load = 50%.

# kubectl autoscale deployment php-apache --cpu-percent=50 --min=1 --max=30

horizontalpodautoscaler.autoscaling/php-apache autoscaled

If you look up the state of HPA, you can see the settings and the current state. Since no web request that causes CPU load has been sent yet, CPU load is still at 0%.

# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 0%/50% 1 30 1 80s

3. Generating Load

Now, in a new terminal, run a pod that generates load. This pod sends web requests continuously. You can stop the requests with Ctrl+C.

# kubectl run -i --tty load-generator --rm --image=busybox --restart=Never -- /bin/sh -c "while sleep 0.01; do wget -q -O- http://php-apache; done"

If you don't see a command prompt, try pressing enter.

OK!OK!OK!OK!OK!OK!OK!

Using the kubectl top nodes command, you can see the current resource usage of the node. After the load-generating pod starts, you can observe the CPU load increase over time.

# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

autoscaler-test-default-w-ohw5ab5wpzug-node-0 66m 6% 1010Mi 58%

(A moment later)

# kubectl top nodes

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

autoscaler-test-default-w-ohw5ab5wpzug-node-0 574m 57% 1013Mi 58%

If you look up the status of HPA, you can see the increase in CPU load and the resultant increase in REPLICAS (number of pods).

# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 250%/50% 1 30 5 2m44s
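The replica count shown above is consistent with the scaling formula in the Kubernetes HPA documentation: the desired count is the current count multiplied by the ratio of the current metric to the target, rounded up. The sketch below applies that formula and clamps the result to the configured minimum and maximum. It deliberately ignores the tolerance band and stabilization behavior of the real controller, and the function name is hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_utilization_pct: float,
                     target_utilization_pct: float,
                     min_replicas: int, max_replicas: int) -> int:
    """desired = ceil(current * currentMetric / targetMetric), clamped to [min, max]."""
    raw = math.ceil(current_replicas * current_utilization_pct / target_utilization_pct)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # One replica at 250% CPU against a 50% target, limits 1 to 30.
    print(desired_replicas(1, 250, 50, min_replicas=1, max_replicas=30))
```

With the values from this example (1 replica, 250% utilization, 50% target), the formula yields 5 replicas.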

4. Checking the operation of Autoscaler

If you look up the pods, you can see that, because the number of pods has increased, some pods are scheduled to node-0 and running while others are still pending.

# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

load-generator 1/1 Running 0 2m 10.100.8.39 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

php-apache-79544c9bd9-6f7nm 0/1 Pending 0 65s <none> <none> <none> <none>

php-apache-79544c9bd9-82xkn 1/1 Running 0 80s 10.100.8.41 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

php-apache-79544c9bd9-cjj9q 0/1 Pending 0 80s <none> <none> <none> <none>

php-apache-79544c9bd9-k6nnt 1/1 Running 0 4m27s 10.100.8.38 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

php-apache-79544c9bd9-mplnn 0/1 Pending 0 19s <none> <none> <none> <none>

php-apache-79544c9bd9-t2knw 1/1 Running 0 80s 10.100.8.40 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

Being unable to schedule pods is the condition under which Autoscaler scales up the nodes. If you look up the status information provided by the Cluster Autoscaler pod, you can see that ScaleUp is in the InProgress state.

# kubectl get cm/cluster-autoscaler-status -n nhn-ng-default-worker -o yaml

apiVersion: v1

data:

status: |+

Cluster-autoscaler status at 2020-11-24 13:00:40.210137143 +0000 UTC:

Cluster-wide:

Health: Healthy (ready=1 unready=0 notStarted=0 longNotStarted=0 registered=1 longUnregistered=0)

LastProbeTime: 2020-11-24 13:00:39.930763305 +0000 UTC m=+1246178.729396969

LastTransitionTime: 2020-11-10 02:51:14.353177175 +0000 UTC m=+13.151810823

ScaleUp: InProgress (ready=1 registered=1)

LastProbeTime: 2020-11-24 13:00:39.930763305 +0000 UTC m=+1246178.729396969

LastTransitionTime: 2020-11-24 12:58:34.83642035 +0000 UTC m=+1246053.635054003

ScaleDown: NoCandidates (candidates=0)

LastProbeTime: 2020-11-24 13:00:39.930763305 +0000 UTC m=+1246178.729396969

LastTransitionTime: 2020-11-20 01:42:32.287146552 +0000 UTC m=+859891.085780205

NodeGroups:

Name: default-worker-bf5999ab

Health: Healthy (ready=1 unready=0 notStarted=0 longNotStarted=0 registered=1 longUnregistered=0 cloudProviderTarget=2 (minSize=1, maxSize=3))

LastProbeTime: 2020-11-24 13:00:39.930763305 +0000 UTC m=+1246178.729396969

LastTransitionTime: 2020-11-10 02:51:14.353177175 +0000 UTC m=+13.151810823

ScaleUp: InProgress (ready=1 cloudProviderTarget=2)

LastProbeTime: 2020-11-24 13:00:39.930763305 +0000 UTC m=+1246178.729396969

LastTransitionTime: 2020-11-24 12:58:34.83642035 +0000 UTC m=+1246053.635054003

ScaleDown: NoCandidates (candidates=0)

LastProbeTime: 2020-11-24 13:00:39.930763305 +0000 UTC m=+1246178.729396969

LastTransitionTime: 2020-11-20 01:42:32.287146552 +0000 UTC m=+859891.085780205

...

After a while, you can see another node (node-8) has been added.

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

autoscaler-test-default-w-ohw5ab5wpzug-node-0 Ready <none> 22d v1.23.3

autoscaler-test-default-w-ohw5ab5wpzug-node-8 Ready <none> 90s v1.23.3

You can see all pods that were in Pending state are properly scheduled and now in the Running state.

# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

load-generator 1/1 Running 0 5m32s 10.100.8.39 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

php-apache-79544c9bd9-6f7nm 1/1 Running 0 4m37s 10.100.42.3 autoscaler-test-default-w-ohw5ab5wpzug-node-8 <none> <none>

php-apache-79544c9bd9-82xkn 1/1 Running 0 4m52s 10.100.8.41 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

php-apache-79544c9bd9-cjj9q 1/1 Running 0 4m52s 10.100.42.5 autoscaler-test-default-w-ohw5ab5wpzug-node-8 <none> <none>

php-apache-79544c9bd9-k6nnt 1/1 Running 0 7m59s 10.100.8.38 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

php-apache-79544c9bd9-mplnn 1/1 Running 0 3m51s 10.100.42.4 autoscaler-test-default-w-ohw5ab5wpzug-node-8 <none> <none>

php-apache-79544c9bd9-t2knw 1/1 Running 0 4m52s 10.100.8.40 autoscaler-test-default-w-ohw5ab5wpzug-node-0 <none> <none>

If you press Ctrl+C to stop the pod (load-generator) that was run to generate load, the load will decrease after a while. As the load falls, the CPU usage of the pods falls as well, which in turn decreases the number of pods.

# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

php-apache Deployment/php-apache 0%/50% 1 30 1 31m

As the number of pods decreases, the resource usage of the nodes also decreases, which results in a reduction in the number of nodes. You can see that the newly added node-8 has been removed.

# kubectl get nodes

NAME STATUS ROLES AGE VERSION

autoscaler-test-default-w-ohw5ab5wpzug-node-0 Ready <none> 22d v1.23.3

User Script (old)

You can register a user script when creating clusters and additional node groups. A user script has the following features.

- Feature setting

- This feature can be set by worker node group.

- A user script entered when creating clusters is applied to the default worker node group.

- A user script entered when creating additional node groups is applied to the corresponding worker node group.

- The content of a user script cannot be changed after the worker node group is created.

- Script execution time

- A user script is executed during the instance initialization process while initializing the worker node.

- After the user script has been executed, the instance is configured and registered as a worker node of the worker node group.

- Script content

- The first line of a user script must start with #!.

- The maximum size of script is 64KB.

- The script is run with the root permission.

- The script execution records are stored in the following location.

- Script exit code: /var/log/userscript.exitcode

- Standard output and standard error streams of script: /var/log/userscript.output
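The script content rules above can be checked locally before registering a script. This is a minimal sketch with a hypothetical helper name; it assumes that "64KB" means 64 * 1024 bytes of UTF-8 encoded text, which the guide does not specify.

```python
from typing import List

# Assumption: "64KB" is interpreted as 64 * 1024 bytes.
MAX_SCRIPT_BYTES = 64 * 1024


def validate_user_script(script: str) -> List[str]:
    """Return a list of rule violations; an empty list means the script passes."""
    problems = []
    if not script.startswith("#!"):
        problems.append("first line must start with #!")
    if len(script.encode("utf-8")) > MAX_SCRIPT_BYTES:
        problems.append("script exceeds 64KB")
    return problems


if __name__ == "__main__":
    print(validate_user_script("#!/bin/bash\necho hello\n"))
    print(validate_user_script("echo hello\n"))
```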

User Script

The features of a new version of user script are included in the node groups created after July 26, 2022. The following features are found in the new version.

- You can change the user script content after the worker node group is created.

- However, the changes are applied only to nodes created after the user script change.

- The script execution records are stored in the following location.

- Script exit code: /var/log/userscript_v2.exitcode

- Standard output and standard error streams of script: /var/log/userscript_v2.output

- Correlations with the old version

- Features of the new version replace those of the old version.

- The user script set when creating node groups through the console or API is configured as the new version.

- For worker node groups that have an old-version user script set, the old-version and new-version features work separately.

- You cannot change the user script content set in the old version.

- You can change the user script content set in the new version.

- If user scripts are set in both the old version and the new version, they are executed in the following order.

- The old version of a user script

- The new version of a user script

Change Instance Flavor

Change the instance flavor of a worker node group. The instance flavors of all worker nodes in a worker node group are changed.

Process

Changing the instance flavor proceeds in the following order.

- Deactivate the cluster autoscaler feature.

- Add a buffer node to the worker node group.

- Perform the following tasks for all worker nodes within the worker node group:

- Evict the working pods from the worker node, and make the nodes not schedulable.

- Change the instance flavor of a worker node.

- Make the nodes schedulable.

- Evict working pods from the buffer node, and delete the buffer node.

- Reactivate the cluster autoscaler feature.

Instance flavor changes work in a similar way to worker component upgrades. For more details on creating and deleting buffer nodes and evicting pods, see Upgrade a Cluster.

Constraints

You can only change an instance to another flavor that is compatible with its current flavor.

- m2, c2, r2, t2, x1 flavor instances can be changed to m2, c2, r2, t2, x1 flavors.

- m2, c2, r2, t2, x1 flavor instances cannot be changed to u2 flavors.

- u2 flavor instances cannot be changed to other flavors once they have been created, not even to those of the same u2 flavor.
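The compatibility rules above can be expressed as a small lookup. This sketch is an illustration only: the function name is hypothetical, flavors are identified by their family prefix (for example "m2"), and any family not mentioned in the list is conservatively treated as not changeable, which is an assumption.

```python
# Families that the guide lists as interchangeable with each other.
INTERCHANGEABLE_FAMILIES = {"m2", "c2", "r2", "t2", "x1"}


def can_change_flavor(current_family: str, target_family: str) -> bool:
    """Return True if an instance of current_family may be changed to target_family."""
    if current_family == "u2" or target_family == "u2":
        # u2 instances cannot be changed at all, and nothing can be changed to u2.
        return False
    # Assumption: families not listed in the guide are treated as incompatible.
    return (current_family in INTERCHANGEABLE_FAMILIES
            and target_family in INTERCHANGEABLE_FAMILIES)


if __name__ == "__main__":
    print(can_change_flavor("m2", "c2"))
    print(can_change_flavor("m2", "u2"))
    print(can_change_flavor("u2", "u2"))
```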

Use Custom Image as Worker Image

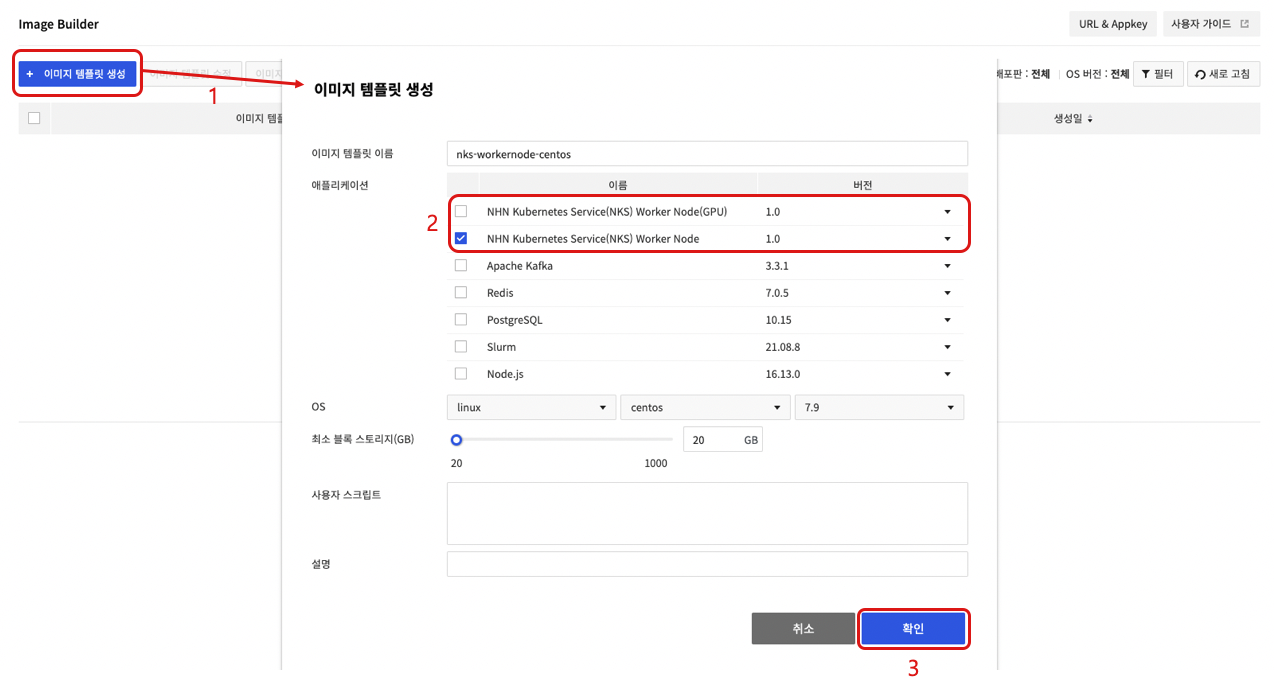

You can create a worker node group using your custom images. This requires additional work (conversion to NKS worker node) in NHN Cloud Image Builder so that the custom image can be used as a worker node image. In Image Builder, you can create custom worker node images by creating image templates with the worker node application of NHN Kubernetes Service (NKS). For more information on Image Builder, see Image Builder User Guide.

[Caution] Conversion to NKS worker node involves installing packages and changing settings, so the conversion may fail if the source image does not work properly. You may be charged for using the Image Builder service.

Constraints

Only custom images created based on NHN Cloud instances can be used as worker node images. This feature is only available for specific instance images. For the conversion work, you must select the application version that matches the image of the base instance from which you are creating your custom image. See the table below for the application version to choose for each instance image.

| OS | Image | Application Version |
|---|---|---|
| CentOS | CentOS 7.9 (2022.11.22) | 1.0 |
| | CentOS 7.9 (2023.05.25) | 1.1 |
| | CentOS 7.9 (2023.08.22) | 1.2 |
| | CentOS 7.9 (2023.11.21) | 1.3 |
| | Rocky Linux 8.9 (2024.02.20) | 1.4 |
| Rocky | Rocky Linux 8.6 (2023.03.21) | 1.0 |
| | Rocky Linux 8.7 (2023.05.25) | 1.1 |
| | Rocky Linux 8.8 (2023.08.22) | 1.2 |
| | Rocky Linux 8.8 (2023.11.21) | 1.3 |
| | Rocky Linux 8.9 (2024.02.20) | 1.4 |
| Ubuntu | Ubuntu Server 18.04.6 LTS (2023.03.21) | 1.0 |
| | Ubuntu Server 20.04.6 LTS (2023.05.25) | 1.1 |
| | Ubuntu Server 20.04.6 LTS (2023.08.22) | 1.2 |
| | Ubuntu Server 20.04.6 LTS (2023.11.21) | 1.3 |
| | Ubuntu Server 22.04.3 LTS (2023.11.21) | 1.3 |
| | Ubuntu Server 20.04.6 LTS (2024.02.20) | 1.4 |
| | Ubuntu Server 22.04.3 LTS (2024.02.20) | 1.4 |
| Debian | Debian 11.6 Bullseye (2023.03.21) | 1.0 |
| | Debian 11.6 Bullseye (2023.05.25) | 1.1 |
| | Debian 11.7 Bullseye (2023.08.22) | 1.2 |
| | Debian 11.8 Bullseye (2023.11.21) | 1.3 |
| | Debian 11.8 Bullseye (2024.02.20) | 1.4 |

[Note] During the process of converting a custom image to a worker node image, GPU drivers are installed according to the options selected. Therefore, even when you want a custom GPU worker node image, you do not need to create the custom image from a GPU instance.

Process

To use a custom image as a worker node image, perform the following process in the Image Builder service.

- Click Create Image Template.

- After selecting the application, enter the image template name, OS, minimum block storage (GB), user script, and description.

- For a group of worker nodes that do not use a GPU flavor, select the NHN Kubernetes Service (NKS) Worker Node application.

- For a group of worker nodes that use a GPU flavor, select the NHN Kubernetes Service (NKS) Worker Node (GPU) application.

- Click Confirm to create an image template.

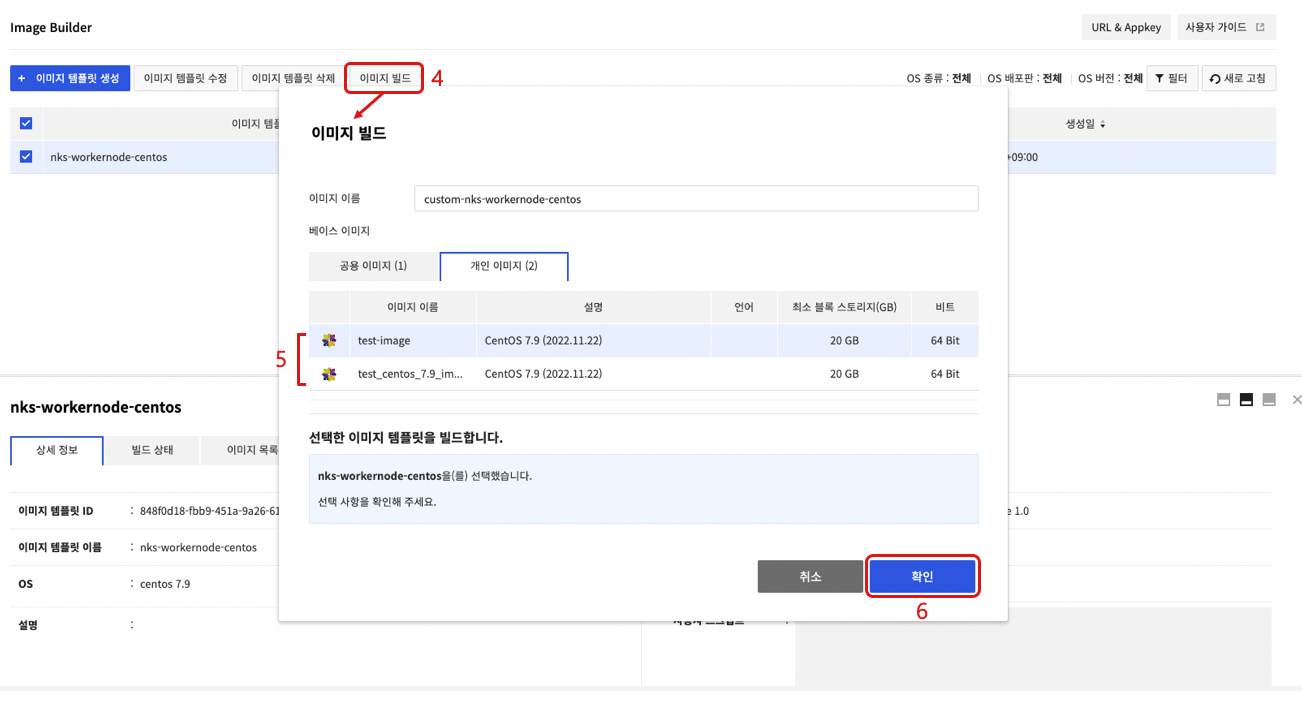

- Select the created image template and choose Build Image.

- On the Build Image screen, select the Private Image tab and select a custom image to convert to a worker node.

- Click Confirm to create a new image after conversion to NKS worker node completes.

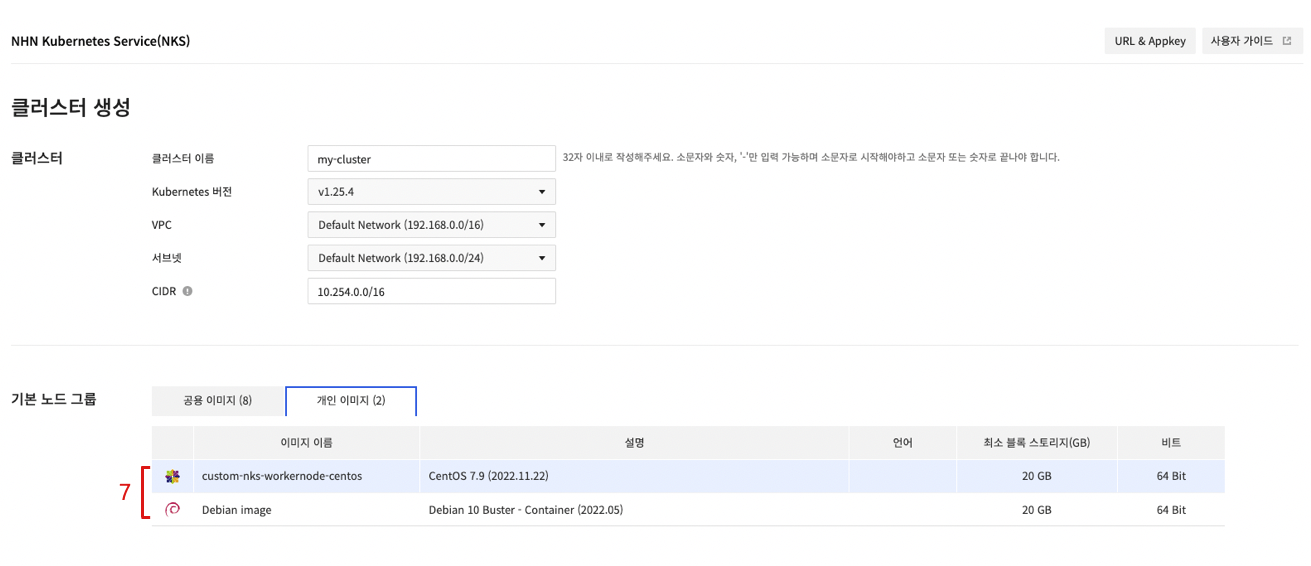

- Select the created custom image on the Create Cluster or Create Node Group screen.

Cluster Management

To run and manage clusters from a remote host, kubectl is required, which is the command-line tool (CLI) provided by Kubernetes.

Installing kubectl

You can use kubectl by downloading an executable file without any special installation process. The download path for each operating system is as follows.

[Caution] If you install Kubernetes-related components such as kubeadm, kubelet, and kubectl using the package manager on worker nodes, the cluster may malfunction. If you are installing kubectl on a worker node, please refer to the download command below to download the file.

| OS | Download Command |
|---|---|
| Linux | curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/linux/amd64/kubectl |
| macOS | curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/darwin/amd64/kubectl |
| Windows | curl -LO https://storage.googleapis.com/kubernetes-release/release/v1.15.7/bin/windows/amd64/kubectl.exe |

For more details on installation and options, see Install and Set Up kubectl.

Change Permission

The downloaded file does not have the execute permission by default. You need to add the execute permission.

$ chmod +x kubectl

Change the Location or Set the Path

Move the file to a directory included in the PATH environment variable so that kubectl can be executed from any location, or add the directory containing kubectl to PATH.

- Move the file to a directory included in PATH

$ sudo mv kubectl /usr/local/bin/

- Add the directory to PATH

# Run this in the directory that contains kubectl

$ export PATH=$PATH:$(pwd)

Configuration

To access a Kubernetes cluster with kubectl, a cluster configuration file (kubeconfig) is required. On the NHN Cloud web console, open the Container > NHN Kubernetes Service (NKS) page and select the cluster to access. Under Basic Information, click Download of Configuration Files to download the configuration file. Move the downloaded file to a location of your choice so that kubectl can reference it.

[Caution] A configuration file downloaded from the NHN Cloud web console includes cluster information and an authentication token. Anyone who has the file is authorized to access the corresponding Kubernetes cluster, so take care not to lose or expose it.

kubectl needs the cluster configuration file every time it is executed, so the file must be specified with the --kubeconfig option. However, if the KUBECONFIG environment variable contains the path of the cluster configuration file, you do not need to specify the option each time.

$ export KUBECONFIG={Path of cluster configuration file}

If you don't want to set an environment variable, you can copy the cluster configuration file to $HOME/.kube/config, which is the default configuration file path of kubectl. However, when there are many clusters, switching the environment variable is easier.
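The lookup order described in this section (the --kubeconfig option first, then the KUBECONFIG environment variable, then $HOME/.kube/config) can be sketched as follows. The function name is hypothetical, and the sketch is simplified: when KUBECONFIG lists several files, kubectl merges them, whereas this example only returns the first one.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def resolve_kubeconfig(flag_value: Optional[str] = None,
                       env: Optional[Mapping[str, str]] = None) -> Path:
    """Return the configuration file path that would be consulted first."""
    env = os.environ if env is None else env
    if flag_value:
        # An explicit --kubeconfig value takes precedence.
        return Path(flag_value)
    env_value = env.get("KUBECONFIG")
    if env_value:
        # Simplification: use only the first entry of a path list.
        return Path(env_value.split(os.pathsep)[0])
    # Default location used when nothing else is set.
    return Path.home() / ".kube" / "config"


if __name__ == "__main__":
    print(resolve_kubeconfig())
```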

Confirming Connection

Use the kubectl version command to check that the configuration is correct. If there is no problem, Server Version is printed.

$ kubectl version

Client Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.7", GitCommit:"6c143d35bb11d74970e7bc0b6c45b6bfdffc0bd4", GitTreeState:"clean", BuildDate:"2019-12-11T12:42:56Z", GoVersion:"go1.12.12", Compiler:"gc", Platform:"darwin/amd64"}

Server Version: version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.7", GitCommit:"6c143d35bb11d74970e7bc0b6c45b6bfdffc0bd4", GitTreeState:"clean", BuildDate:"2019-12-11T12:34:17Z", GoVersion:"go1.12.12", Compiler:"gc", Platform:"linux/amd64"}

- Client Version: Version information of the kubectl binary that was executed

- Server Version: Version information of the Kubernetes components that make up the cluster

CSR (CertificateSigningRequest)

Using the Certificate API of Kubernetes, you can request and issue an X.509 certificate for a Kubernetes API client. The CSR resource lets you request a certificate and approve or reject the request. For more information, see the Certificate Signing Requests document.

CSR Request and Issue Approval Example

First, create a private key and a certificate signing request (CSR) file from it. For more information on certificate creation, see the Certificates document.

$ openssl genrsa -out dev-user1.key 2048

Generating RSA private key, 2048 bit long modulus

...........................................................................+++++

..................+++++

e is 65537 (0x010001)

$ openssl req -new -key dev-user1.key -subj "/CN=dev-user1" -out dev-user1.csr

Create a CSR resource that includes the base64-encoded signing request generated from the private key, and request certificate issuance.

$ BASE64_CSR=$(cat dev-user1.csr | base64 | tr -d '\n')

$ cat <<EOF > csr.yaml
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  name: dev-user1
spec:
  groups:
  - system:authenticated
  request: ${BASE64_CSR}
  signerName: kubernetes.io/kube-apiserver-client
  expirationSeconds: 86400  # one day
  usages:
  - client auth
EOF

$ kubectl apply -f csr.yaml

certificatesigningrequest.certificates.k8s.io/dev-user1 created

The registered CSR is in the Pending state, which means it is waiting for issuance approval or rejection.

$ kubectl get csr

NAME        AGE   SIGNERNAME                            REQUESTOR   REQUESTEDDURATION   CONDITION
dev-user1   3s    kubernetes.io/kube-apiserver-client   admin       24h                 Pending

Approve this certificate issuance request.

$ kubectl certificate approve dev-user1

certificatesigningrequest.certificates.k8s.io/dev-user1 approved

If you check the CSR again, you can see that it has changed to the Approved,Issued state.

$ kubectl get csr

NAME        AGE   SIGNERNAME                            REQUESTOR   REQUESTEDDURATION   CONDITION
dev-user1   28s   kubernetes.io/kube-apiserver-client   admin       24h                 Approved,Issued

You can look up the certificate as shown below. The certificate is the value of the certificate field under status.

$ kubectl get csr dev-user1 -o yaml
apiVersion: certificates.k8s.io/v1
kind: CertificateSigningRequest
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"certificates.k8s.io/v1","kind":"CertificateSigningRequest","metadata":{"annotations":{},"name":"dev-user1"},"spec":{"expirationSeconds":86400,"groups":["system:authenticated"],"request":"LS0t..(omitted)","signerName":"kubernetes.io/kube-apiserver-client","usages":["client auth"]}}
  creationTimestamp: "2023-09-15T05:53:12Z"
  name: dev-user1
  resourceVersion: "176619"
  uid: a5813153-40de-4725-9237-3bf684fd1db9
spec:
  expirationSeconds: 86400
  groups:
  - system:masters
  - system:authenticated
  request: LS0t..(omitted)
  signerName: kubernetes.io/kube-apiserver-client
  usages:
  - client auth
  username: admin
status:
  certificate: LS0t..(omitted)
  conditions:
  - lastTransitionTime: "2023-09-15T05:53:26Z"
    lastUpdateTime: "2023-09-15T05:53:26Z"
    message: This CSR was approved by kubectl certificate approve.
    reason: KubectlApprove
    status: "True"
    type: Approved
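The certificate in status.certificate is base64-encoded, so it has to be decoded before a client can use it as a PEM file. The following is a minimal sketch of that step. The function name fetch_csr_cert is hypothetical, and `base64 -d` assumes the GNU coreutils spelling of the decode flag (some platforms use a different flag).

```shell
# fetch_csr_cert is a hypothetical helper name, not a kubectl subcommand.
# Usage: fetch_csr_cert <csr-name> <output-file>
fetch_csr_cert() {
    csr_name="$1"
    out_file="$2"
    # status.certificate holds the issued certificate, base64-encoded.
    encoded=$(kubectl get csr "$csr_name" -o jsonpath='{.status.certificate}') || return 1
    if [ -z "$encoded" ]; then
        # An empty field usually means the CSR has not been approved and issued yet.
        echo "CSR $csr_name has no certificate yet" >&2
        return 1
    fi
    # Assumption: GNU-style base64; adjust the flag on platforms that differ.
    printf '%s' "$encoded" | base64 -d > "$out_file"
}
```

For the example in this section, `fetch_csr_cert dev-user1 dev-user1.crt` would write the issued certificate next to dev-user1.key.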

[Caution] This feature is provided only when the time of cluster creation falls within the following period:

- Pangyo region: Cluster created on December 29, 2020 or later

- Pyeongchon region: Cluster created on December 24, 2020 or later

Admission Controller plugin

The admission controller can intercept a Kubernetes API server request and change objects or deny the request. See Admission Controller for more information about the admission controller. For usage examples of the admission controller, see Admission Controller Guide.

The plugins applied to the admission controller vary depending on the cluster version and the time of cluster creation. The lists below show the plugins available by cluster version, region, and time of cluster creation.

v1.19.13 or earlier

The following applies to clusters created on February 22, 2021 or earlier for the Pangyo region and clusters created on February 17, 2021 or earlier for the Pyeongchon region.

- DefaultStorageClass

- DefaultTolerationSeconds

- LimitRanger

- MutatingAdmissionWebhook

- NamespaceLifecycle

- NodeRestriction

- ResourceQuota

- ServiceAccount

- ValidatingAdmissionWebhook

The following applies to clusters created on February 23, 2021 or later for the Pangyo region and clusters created on February 18, 2021 or later for the Pyeongchon region.

- DefaultStorageClass

- DefaultTolerationSeconds

- LimitRanger

- MutatingAdmissionWebhook

- NamespaceLifecycle

- NodeRestriction

- PodSecurityPolicy (newly added)

- ResourceQuota

- ServiceAccount

- ValidatingAdmissionWebhook

v1.20.12 or later

All default active admission controllers per Kubernetes version are enabled. The following controllers are activated in addition to the default active admission controllers.

- NodeRestriction

- PodSecurityPolicy

Cluster upgrade

NHN Kubernetes Service (NKS) supports the Kubernetes component upgrade for the currently operating Kubernetes clusters.

Policy of supporting different Kubernetes versions

A Kubernetes version is represented as x.y.z, where x is the major, y is the minor, and z is the patch version. A release that adds features is a major or minor version upgrade. A release that only contains changes compatible with previous versions, such as bug fixes, is a patch version. For more information, see Semantic Versioning 2.0.0.

Kubernetes components can be upgraded while the cluster is in operation. To make this possible, each Kubernetes component defines which version differences between components it supports. For example, components whose minor versions differ by one remain feature-compatible with each other, which allows components to be upgraded on a running cluster. The upgrade sequence for each type of component is also defined. For more information, see Version Skew Policy.

Feature Behavior

This section explains how the Kubernetes cluster upgrade feature supported by NHN Cloud works.

Kubernetes Version Control

NHN Cloud's Kubernetes cluster manages Kubernetes versions separately for the cluster master and for each worker node group. The master's Kubernetes version can be checked on the cluster view screen, and the Kubernetes version of a worker node group can be checked on the view screen of that worker node group.

Upgrade Rules

When upgrading, the proper sequence must be kept in accordance with NHN Cloud's Kubernetes cluster version control and the Kubernetes version support policy. The following rules apply to NHN Cloud's Kubernetes cluster upgrade feature.

- Upgrade commands must be given separately to the master and to each worker node group.

- To upgrade the master, the Kubernetes versions of the master and all worker node groups must match.

- The master must be upgraded before a worker node group can be upgraded.

- An upgrade is possible only to the next version of the current version (minor version + 1).

- Downgrade is not supported.

- If the cluster is being updated by another operation, the upgrade cannot proceed.

The following table shows whether an upgrade is possible at each stage of upgrading the Kubernetes version. The following conditions are used for the example:

- List of Kubernetes versions supported by NHN Cloud: v1.21.6, v1.22.3, v1.23.3

- Clusters are created as v1.21.6

| Status | Master version | Whether master can be upgraded | Worker node group version | Whether worker node group can be upgraded |
|---|---|---|---|---|
| Initial state | v1.21.6 | Available (1) | v1.21.6 | Unavailable (2) |
| State after master upgrade | v1.22.3 | Unavailable (3) | v1.21.6 | Available (4) |
| State after worker node group upgrade | v1.22.3 | Available (1) | v1.22.3 | Unavailable (2) |
| State after master upgrade | v1.23.3 | Unavailable (3) | v1.22.3 | Available (4) |
| State after worker node group upgrade | v1.23.3 | Unavailable (5) | v1.23.3 | Unavailable (2) |

Notes

- 1: Upgrade is possible because the versions of the master and all worker node groups are matching

- 2: Worker node groups can be upgraded once the master is upgraded

- 3: The versions of the master and all worker node groups must match in order to upgrade

- 4: Upgrade is possible because the master is upgraded

- 5: Upgrade is not possible because the latest version supported by NHN Cloud is being used
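The rules and the table above can be restated as two small checks. This is only an illustrative sketch of the documented rules, not how NKS implements them. The function names are made up for this example, versions are compared by minor number only, and the rule about a cluster being updated by another operation is not modeled.

```shell
# Extract the minor number from a version string such as v1.22.3.
minor_of() {
    echo "$1" | cut -d. -f2
}

# master_can_upgrade <latest-supported> <master> <worker-group-version>...
# Succeeds when every worker node group matches the master (notes 1 and 3)
# and a newer supported version exists (note 5).
master_can_upgrade() {
    latest="$1"
    master="$2"
    shift 2
    for worker in "$@"; do
        [ "$worker" = "$master" ] || return 1
    done
    [ "$(minor_of "$master")" -lt "$(minor_of "$latest")" ]
}

# worker_can_upgrade <master> <worker-group-version>
# Succeeds when the master has already been upgraded past the group (notes 2 and 4).
worker_can_upgrade() {
    [ "$(minor_of "$1")" -gt "$(minor_of "$2")" ]
}
```

With the example conditions, `master_can_upgrade v1.23.3 v1.21.6 v1.21.6` succeeds for the initial state, while `worker_can_upgrade v1.21.6 v1.21.6` fails until the master has been upgraded.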

Upgrading master components

NHN Cloud's Kubernetes cluster master consists of multiple master nodes to ensure high availability. Because the masters are upgraded with a rolling update, the availability of the cluster is maintained during the upgrade.

The following can happen in this process.

- Kubernetes API requests can fail temporarily.

Upgrading worker components

Worker components can be upgraded for each worker node group. Worker components are upgraded in the following steps:

- Deactivate the cluster autoscaler feature. (1)

- Add buffer nodes (2) to the worker node group. (3)

- Perform the following tasks for all worker nodes within the worker node group. (4)

    - Evict working pods from the worker node, and make the node not schedulable.

    - Upgrade worker components.

    - Make the node schedulable.

- Evict working pods from the buffer node, and delete the buffer node.

- Reactivate the cluster autoscaler feature. (1)

Notes

- 1: This step applies only if the cluster autoscaler feature was enabled before the upgrade started.

- 2: A buffer node is an extra node created so that pods evicted from existing worker nodes can be rescheduled during the upgrade process. It is created with the same specifications as the worker nodes defined in that worker node group, and is automatically deleted when the upgrade process is over. This node is charged based on the instance fee policy.

- 3: You can define the number of buffer nodes for the upgrade. The default value is 1, and no buffer node is added when it is set to 0. The minimum value is 0, and the maximum value is (maximum number of nodes per node group - current number of nodes in the worker node group).

- 4: Tasks are executed on as many nodes at a time as the maximum number of unavailable nodes set for the upgrade. The default value is 1, the minimum value is 1, and the maximum value is the current number of nodes in the worker node group.
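The value ranges in notes 3 and 4 can be written as simple bounds checks. The sketch below only restates those ranges; the function names are hypothetical, and the node group limit is passed in as a parameter because its actual value depends on your environment.

```shell
# valid_buffer_count <requested> <max-nodes-per-group> <current-nodes>
# Note 3: between 0 and (maximum nodes per node group - current nodes).
valid_buffer_count() {
    max_allowed=$(( $2 - $3 ))
    [ "$1" -ge 0 ] && [ "$1" -le "$max_allowed" ]
}

# valid_max_unavailable <requested> <current-nodes>
# Note 4: between 1 and the current number of nodes in the worker node group.
valid_max_unavailable() {
    [ "$1" -ge 1 ] && [ "$1" -le "$2" ]
}
```

For a worker node group with 8 nodes and an assumed limit of 10 nodes per group, `valid_buffer_count 2 10 8` succeeds and `valid_buffer_count 3 10 8` fails.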

The following can happen in this process.

- Pods in service will be evicted and scheduled to another node. (To find out more about pod eviction, refer to the notes below.)

- The cluster autoscaler feature does not operate.